Malware authors have lifted a page from the legit software industry's rule book and are slapping copyright notices on their Trojans.

One Russian-based outfit has claimed violations of its "licensing agreement" by its underworld customers will result in samples of the knock-off code being sent to anti-virus firms.

The sanction was spotted in the help files of a malware package called Zeus, detected by security firm Symantec as "Infostealer Banker-C". Zeus is offered for sale on the digital underground, and its creators want to protect their revenue stream by making the creation of knock-offs less lucrative.

The copyright notice, a reflection of a lack of trust between virus creators and their customers, is designed to prevent the malware from being freely distributed after its initial purchase. There's no restriction on the number of machines miscreants might use the original malware to infect.

Virus writers are essentially relying on security firms to help them get around the problem that miscreants who buy their code to steal online banking credentials have few scruples about ripping it off and selling it on.

In a blog posting, Symantec security researchers have posted screen shots illustrating the "licensing agreement" for Infostealer Banker-C.

The terms of this licensing agreement demand that clients promise not to distribute the code to others, and that they pay a fee for any update to the product that doesn't involve a bug fix. Reverse engineering of the malware code is also verboten.

"These are typical restrictions that could be applied to any software product, legitimate or not," writes Symantec researcher Liam O'Murchu, adding that the most noteworthy section deals with sanctions for producing knock-off code (translation below).

"In cases of violations of the agreement and being detected, the client loses any technical support. Moreover, the binary code of your bot will be immediately sent to antivirus companies."

Despite the warning, copies of the malware were traded freely on the digital underground days after its release, Symantec reports. "It just goes to show you just can’t trust anyone in the underground these days," O'Murchu notes.

Monday, May 5, 2008

Friday, May 2, 2008

After Long time !!!

Just thought of writing a BASIC program after almost 15 years !!!

AUTO

10 REM --- Hi BASIC ---

20 PRINT "HELLO WORLD! "

30 END

AUTO

10 REM -- I STILL REMEMBER YOU ---

20 PRINT "What is your name"

30 INPUT NAME$

40 PRINT "What is your age"

50 INPUT AGE

60 PRINT "What day of the month were you born? Enter only the day"

70 INPUT DATE

80 PRINT "What month were you born? Enter only the month number"

90 INPUT MONTH

100 DIFF = 2008 - AGE

110 NDIFF = DIFF

120 NUM = 1

130 IF MONTH < 5 THEN GOTO 140 ELSE GOTO 210

140 FOR I = DIFF + 1 TO 2008 STEP 1

150 PRINT "Your "; NUM ; " birthday was on "; DATE ;"/"; MONTH ;"/"; I

160 NDIFF = NDIFF + 1

170 NUM = NUM + 1

180 NEXT I

200 GOTO 270

210 IF MONTH > 4 THEN GOTO 220 ELSE GOTO 270

220 FOR I = DIFF TO 2007 STEP 1

230 PRINT "Your "; NUM ; " birthday was on "; DATE ;"/"; MONTH ;"/"; NDIFF

240 NDIFF = NDIFF + 1

250 NUM = NUM + 1

260 NEXT I

270 PRINT "Goodbye BASIC. I will love you forever !!!"

280 REM --- End ---

290 STOP

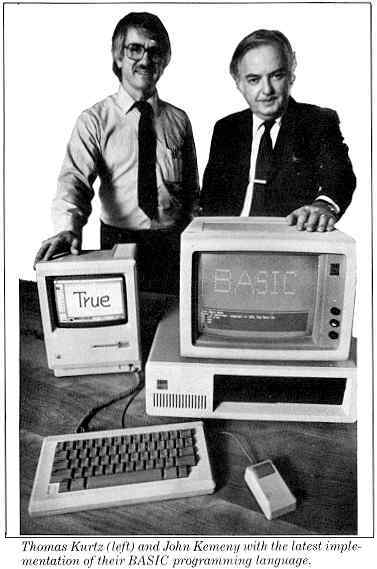

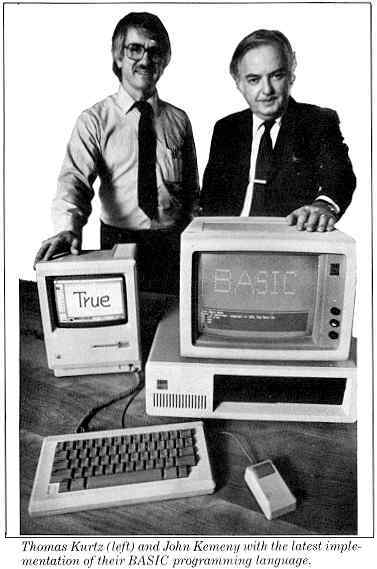

May 1, 1964: First Basic Program Runs

1964: In the predawn hours of May Day, two professors at Dartmouth College run the first program in their new language, Basic.

Mathematicians John G. Kemeny and Thomas E. Kurtz had been trying to make computing more accessible to their undergraduate students. One problem was that available computing languages like Fortran and Algol were so complex that you really had to be a professional to use them.

So the two professors started writing easy-to-use programming languages in 1956. First came Dartmouth Simplified Code, or Darsimco. Next was the Dartmouth Oversimplified Programming Experiment, or Dope, which was too simple to be of much use. But Kemeny and Kurtz used what they learned to craft the Beginner's All-Purpose Symbolic Instruction Code, or Basic, starting in 1963.

The college's General Electric GE-225 mainframe started running a Basic compiler at 4 a.m. on May 1, 1964. The new language was simple enough to use, and powerful enough to make it desirable. Students weren't the only ones who liked Basic, Kurtz wrote: "It turned out that easy-to-learn-and-use was also a good idea for faculty members, staff members and everyone else."

And it's not just for mainframes. Paul Allen and Bill Gates adapted it for personal computers in 1975, and it's still widely used today to teach programming and as a, well, basic language. (Reacting to the proliferation of complex Basic variants, Kemeny and Kurtz formed a company in the 1980s to develop True BASIC, a lean version that meets ANSI and ISO standards.)

The other problem Kemeny and Kurtz attacked was batch-processing, which made for long waits between the successive runs of a debugging process. Building on work by Fernando Corbató, they completed the Dartmouth Time Sharing System, or DTSS, later in 1964. Like Basic, it revolutionized computing.

Ever the innovator, Kemeny served as president of Dartmouth, 1970-81, introducing coeducation to the school in 1972 after more than two centuries of all-male enrollment.

Monday, April 28, 2008

The Race to Zero

The Race to Zero contest is being held during Defcon 16 at the Riviera Hotel in Las Vegas, 8-10 August 2008.

The event involves contestants being given a sample set of viruses and malcode to modify and upload through the contest portal. The portal passes the modified samples through a number of antivirus engines and determines if the sample is a known threat. The first team or individual to pass their sample past all antivirus engines undetected wins that round. Each round increases in complexity as the contest progresses.

There are a number of key ideas we want to get across by running this event:

1. Reverse engineering and code analysis is fun.

2. Not all antivirus is equal; some products are far easier to circumvent than others. Poorly performing antivirus vendors should be called out.

3. The majority of the signature-based antivirus products can be easily circumvented with a minimal amount of effort.

4. The time taken to modify a piece of known malware to circumvent a good proportion of scanners is disproportionate to the costs of antivirus protection and the losses resulting from the trust placed in it.

5. Signature-based antivirus is dead; people need to look to heuristic, statistical and behaviour-based techniques to identify emerging threats.

6. Antivirus is just part of the larger picture; you need to look at controlling your endpoint devices with patching, firewalling and sound security policies to remain virus-free.
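Point 3 in the list can be made concrete with a toy example. The sketch below assumes, purely for illustration, a scanner whose "signature" is the exact SHA-256 digest of a known sample; real engines use more varied signatures, and the names `KNOWN_SIGNATURES` and `is_flagged` are hypothetical. It uses harmless placeholder bytes and shows only that a one-byte change produces a different digest, so an exact-match lookup no longer fires.

```python
import hashlib

# Harmless placeholder bytes standing in for a "known sample" (assumption).
known_sample = b"harmless placeholder standing in for a known sample"

# Hypothetical signature database: exact SHA-256 digests of known samples.
KNOWN_SIGNATURES = {hashlib.sha256(known_sample).hexdigest()}


def is_flagged(data: bytes) -> bool:
    """Return True when the digest of data exactly matches a stored signature."""
    return hashlib.sha256(data).hexdigest() in KNOWN_SIGNATURES


# Append one byte: the content is otherwise identical, but the digest differs.
modified_sample = known_sample + b"\x00"

print(is_flagged(known_sample))     # exact copy matches the stored signature
print(is_flagged(modified_sample))  # one-byte change no longer matches
```

This is why the list goes on to recommend heuristic and behaviour-based techniques: they do not depend on the sample being byte-for-byte identical to something seen before.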

We are not creating new viruses, and modified samples will not be released into the wild, contrary to the belief of some media organisations.

Above all we want the contestants to have fun!

Wednesday, April 16, 2008

Not so different

The following are programs written in Ada, C and Java that print to the screen the phrase "Hello World."

ADA PROGRAMMING LANGUAGE

with Ada.Text_IO;

procedure Hello_World is

begin

Ada.Text_IO.Put_Line ("Hello World from Ada");

end Hello_World;

C PROGRAMMING LANGUAGE

#include <stdio.h>

int main(void)

{

printf("\nHello World\n");

return 0;

}

JAVA PROGRAMMING LANGUAGE

class helloworldjavaprogram

{

public static void main(String args[])

{

System.out.println("Hello World!");

}

}

The return of ADA

Last fall, contractor Lockheed Martin delivered an update to the Federal Aviation Administration’s next-generation flight data air traffic control system — ahead of schedule and under budget, which is something you don’t often hear about in government circles.

The project, dubbed the En Route Automation Modernization System (ERAM), involved writing more than 1.2 million lines of code and had been labeled by the Government Accountability Office as a high-risk effort. GAO worried that many bugs in the program would appear, which would delay operations and drive up development costs.

Although the project’s success can be attributed to a lot of factors, Jeff O’Leary, an FAA software development and acquisition manager who oversaw ERAM, attributed at least part of it to the use of the Ada programming language.

About half the code in the system is Ada, O’Leary said, and it provided a controlled environment that allowed programmers to develop secure, solid code.

Today, when most people refer to Ada, it’s usually as a cautionary tale. The Defense Department commissioned the programming language in the late 1970s.

The idea was that mandating its use across all the services would stem the proliferation of many programming languages and an even greater number of dialects. Despite the mandate, few programmers used Ada, and the mandate was dropped in 1997. Developers and engineers claimed it was difficult to use.

Military developers stuck with the venerable C programming language they knew well, or they moved to the up-and-coming C++. A few years later, Java took hold, as did Web application languages such as JavaScript.

However, Ada never vanished completely. In fact, in certain communities, notably aviation software, it has remained the programming language of choice.

“It’s interesting that people think that Ada has gone away. In this industry, there is a technology du jour. And people assume things disappear. But especially in the Defense Department, nothing ever disappears,” said Robert Dewar, president of AdaCore and a professor emeritus of computer science at New York University.

Dewar has been working with Ada since 1980.

Last fall, the faithful gathered at the annual SIGAda 2007 conference in Fairfax, Va., where O’Leary and others spoke about Ada’s promise.

This decades-old language can solve a few of today’s most pressing problems — most notably security and reliability.

“We’re seeing a resurgence of interest,” Dewar said. “I think people are beginning to realize that C++ is not the world’s best choice for critical code.”

Tough requirements

ERAM is the latest component in a multi-decade plan to upgrade the country’s air traffic control system. Not surprisingly, the system had some pretty stringent development requirements, O’Leary said.

The system could never lose data. It had to be fault-tolerant. It had to be easily upgraded. It had to allow for continuous monitoring. Programs had to be able to recover from a crash. And the code that runs the system must “be provably and test-ably free” of errors, O’Leary said.

And such testing should reveal when errors occur and when the correct procedures fail to occur. “If I get packet 218, but not 217, it would request 217 again,” he said.
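The packet example O’Leary gives amounts to gap detection on sequence numbers. As a minimal sketch, not ERAM's actual logic, the hypothetical helper `missing_packets` below assumes each packet carries an integer ID and reports the IDs that were skipped, which are the ones a receiver would request again.

```python
def missing_packets(received_ids):
    """Return the sequence numbers skipped between the lowest and highest IDs seen."""
    seen = set(received_ids)
    if not seen:
        return []
    return [n for n in range(min(seen), max(seen) + 1) if n not in seen]


# Packet 217 never arrived, so it is the one to request again.
to_request = missing_packets([215, 216, 218])
print(to_request)
```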

Ada can offer assistance to programmers with many of these tasks, even if it does require more work on the part of the programmer.

“The thing people have always said about Ada is that it is hard to get a program by the compiler, but once you did, it would always work,” Dewar said. “The compiler is checking a lot of stuff. Unlike a C program, where the C compiler will accept pretty much anything and then you have to fight off the bugs in the debugger, many of the problems in Ada are found by the compiler.”

That stringency causes more work for programmers, but it will also make the code more secure, Ada enthusiasts say.

When DOD commissioned the language in 1977 from the French Bull Co., it required that it have lots of checks to ensure the code did what the programmer intended, and nothing more or less.

For instance, unlike many modern languages and even traditional ones such as C and C++, Ada has a feature called strong typing. This means that for every variable a programmer declares, he or she must also specify a range of all possible inputs. If the range entered is 1-100, for instance, and the number 102 is entered, then the program won’t accept that data.

This ensures that a malicious hacker can’t enter a long string of characters as part of a buffer overflow attack or that a wrong value won’t later crash the program.
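The range check described above can be imitated in other languages, though only at run time. The sketch below is an illustration in Python, not Ada: the class `RangedInt` is a hypothetical helper that rejects any value outside its declared bounds. Ada expresses the same idea with range constraints on a type, which the compiler checks where it can and which otherwise raise `Constraint_Error` when violated during execution.

```python
class RangedInt:
    """Integer holder that rejects values outside [low, high] (hypothetical helper)."""

    def __init__(self, low: int, high: int, value: int):
        self.low = low
        self.high = high
        self.value = self._check(value)

    def _check(self, value: int) -> int:
        # Refuse anything outside the declared range instead of storing it.
        if not self.low <= value <= self.high:
            raise ValueError(f"{value} is outside {self.low}..{self.high}")
        return value

    def assign(self, value: int) -> None:
        self.value = self._check(value)


percent = RangedInt(1, 100, 50)
try:
    percent.assign(102)  # out of range, so the assignment is refused
    accepted = True
except ValueError:
    accepted = False

print(accepted, percent.value)
```

The stored value is left unchanged when an assignment is refused, which mirrors the article's point that bad data never makes it into the program's state.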

Ada allows developers to prove security properties about programs. For instance, a programmer might want to prove that a variable is not altered while it is being used through the program. Ada is also friendly to static analysis tools. Static analysis looks at the program flow to ensure odd things aren’t taking place — such as making sure the program always calls a certain function with the same number of arguments. “There is nothing in C that stops a program from doing that,” Dewar said. “In Ada, it is impossible.”

Ada was not perfect for the ERAM job, O’Leary said. There are more than a few things that are still needed. One is better analysis tools.

“We’re not exploiting the data” to the full extent that it could be used, he said. The component interfaces could be better. There should also be tools for automatic code generation and better cross-language support.

Nonetheless, many observers believe the basics of Ada are in place for wider use.

Use cases

Who uses Ada? Not surprisingly, DOD still uses the language, particularly for command and control systems, Dewar said. About half of AdaCore’s sales are to DOD. AdaCore offers an integrated developer environment called GnatPro, and an Ada compiler.

“There [are] tens of millions of lines of Ada in Defense programs,” Dewar said.

NASA and avionics hardware manufacturers are also heavy users of Ada, he said. Anything mission-critical would be suitable for Ada. For instance, embedded systems in the Boeing 777 and 787 run Ada code.

In all these cases, the component manufacturers are “interested in highly reliable mission-critical programs. And that is the niche that Ada has found its way into,” Dewar said.

In addition to AdaCore, IBM Rational and Green Hills Software offer Ada developer environments.

It also works well as a teaching language. The Air Force Academy found it to be a good language that inexperienced programmers could use to build robust programs. At the SIGAda conference, instructor Leemon Baird III showed how a student used Ada to build an artificial-intelligence function for a computer to play a game called Connect4 against human opponents.

“A great part of his success was due to Ada’s features,” Baird said.

Although it was only 2,000 lines, the language allowed the student to write robust code.

“It had to be correct,” he said. The code flowed easily between Solaris and Windows, and could be run across different types of processors with minimal porting.

Programs written in an extension of Ada, called Spark, will be used to run the next generation U.K. ground station air traffic control system, called Interim Future Area Control Tools Support (IFacts).

Praxis, a U.K. systems engineering company, is providing the operating code for IFacts. In 2002, England’s busiest airport terminal, London Heathrow Airport, suffered a software-based breakdown of its airplane routing system.

Praxis is under a lot of pressure to ensure its code is free from defects.

Praxis also used Spark for a 2006 National Security Agency-funded project, called the Tokeneer ID Station, said Rod Chapman, an engineer at Praxis. The idea was to create software that would meet the Common Criteria requirements for Evaluation Assurance Level 5, a process long thought to be too challenging for commercial software.

To do this, the software code that was generated had to have a low number of errors. The program itself was access control software.

Someone wishing to gain entry to a secure facility and use a workstation would need the proper smart card and provide a fingerprint.

By using Spark, a static check was made of the software before it was run, to ensure all the possible conditions led to valid outcomes. In more than 9,939 lines of code, no defects were found after the testing and remediation process was completed.

Although the original language leaned heavily toward strong typing and provability, subsequent iterations have kept Ada modernized, Dewar said. Ada 95 added object-oriented programming capabilities, and Ada 2005 tamped down on security requirements even further. The language has also been ratified as a standard by the American National Standards Institute and by the International Organization for Standardization (ISO/IEC 8652).

Ada was named for Augusta Ada King, Countess of Lovelace, daughter of Lord Byron.

In the mid-19th century, she published what is considered by most to be the world’s first computer program, to be run on a prototype of a computer designed by Charles Babbage, called the Analytical Engine. But don’t let the language’s historical legacy fool you: it might be just the thing to answer tomorrow’s security and reliability challenges.

Monday, April 14, 2008

Tools to access Linux Partitions from Windows

If you dual boot Windows and Linux and have data spread across partitions on both, you are likely to run into some issues.

Sometimes you need to access files on your Linux partitions from Windows, only to realize that it isn’t easily possible. With these tools in hand, however, it’s very easy to access files on your Linux partitions from Windows.

Explore2fs

Explore2fs is a GUI explorer tool for accessing ext2 and ext3 filesystems. It runs under all versions of Windows and can read almost any ext2 and ext3 filesystem.

Project home page: http://www.chrysocome.net/explore2fs

Friday, April 4, 2008

C++ Historical Sources Archive

Abstract

This is a collection of design documents, source code, and other materials concerning the birth, development, standardization, and use of the C++ programming language.

1979 April

Work on C with Classes began

1979 October

First C with Classes (Cpre) running

1983 August

First C++ in use at Bell Labs

1984

C++ named

1985 February

Cfront Release E (first external C++ release)

1985 October

Cfront Release 1.0 (first commercial release)

The C++ Programming Language

1986

First commercial Cfront PC port (Cfront 1.1, Glockenspiel)

1987 February

Cfront Release 1.2

1987 December

First GNU C++ release (1.13)

1988

First Oregon Software C++ release [announcement]; first Zortech C++ release

1989 June

Cfront Release 2.0

1989

The Annotated C++ Reference Manual; ANSI C++ committee (J16) founded (Washington, DC)

1990

First ANSI X3J16 technical meeting (Somerset, NJ) [see group photograph, courtesy of Andrew Koenig]; templates accepted (Seattle, WA); exceptions accepted (Palo Alto, CA); first Borland C++ release

1991

First ISO WG21 meeting (Lund, Sweden); Cfront Release 3.0 (including templates); The C++ Programming Language (2nd edition)

1992

First IBM, DEC, and Microsoft C++ releases

1993

Run-time type identification accepted (Portland, Oregon); namespaces and string (templatized by character type) accepted (Munich, Germany); A History of C++: 1979-1991 published at HOPL2

1994

string (templatized by character type) (San Diego, California); the STL accepted (San Diego, CA and Waterloo, Canada)

1996

export accepted (Stockholm, Sweden)

1997

Final committee vote on the complete standard (Morristown, New Jersey)

1998

ISO C++ standard ratified

2003

Technical Corrigendum; work on C++0x started

2004

Performance technical report; Library technical report (hash tables, regular expressions, smart pointers, etc.)

2005

First votes on features for C++0x (Lillehammer, Norway); auto, static_assert, and rvalue references accepted in principle

2006

First full committee (official) votes on features for C++0x (Berlin, Germany)

Programmers At Work, 22 Years Later

In 1986, the book Programmers at Work presented interviews with 19 programmers and software designers from the early days of personal computing including Charles Simonyi, Andy Hertzfeld, Ray Ozzie, Bill Gates, and Pac Man programmer Toru Iwatani. Leonard Richardson tracked down these pioneers and has compiled a nice summary of where they are now, 22 years later.

Where Are They Now?

Charles Simonyi. Then: Microsoft programmer. Now: super-rich guy, space tourist, endowing Oxford chairs and whatnot. Works at Intentional Software.

Butler Lampson. Then: PARC dude. Now: a Microsoft Fellow.

John Warnock. Then: co-founder of Adobe. Now: retired, serves on boards of directors, apparently runs a bed and breakfast.

Gary Kildall. Then: author of CP/M. Died in 1994. The project he was working on in Programmers at Work became the first encyclopedia distributed on CD-ROM. He also hosted Computer Chronicles for a while.

Bill Gates. Then: founder of Microsoft, popularizer of the word "super". Now: richest guy in the world. After a stint in the 90s as pure evil, semi-retired to focus on philanthropic work.

John Page. Then: co-founder of the Software Publishing Company, makers of PFS:FILE, an early database program. Now: I'm not really sure. Here's a video of him from 2006, so he's probably still alive, but he's not on the web. SPC was acquired in 1996. Through some odd corporate synergy the public face of the business now appears to be Harvard Graphics.

C. Wayne Ratliff. Then: author of dBase. Now: retired.

Dan Bricklin. Then: co-author of VisiCalc. Now: Has a weblog and lots of accessible historical information about his projects. Still runs Software Garden. Still looks almost exactly like his illustration in PaW, leading some to speculate on a "Spreadsheet of Dorian Gray" type effect. I secretly hope he will see this in referer logs and invite me to hang out with him.

Bob Frankston. Then: the other half of VisiCalc. Now: worked for Microsoft for a few years, now retired, has a weblog.

Jonathan Sachs. Then: co-author of Lotus 1-2-3. Now: semi-retired. Gives away Pocket PC software from his home page, and sells photography software as Digital Light & Color. More details in this 2004 oral history.

Ray Ozzie. Then: Lotus Symphony dude, left Lotus to write what would eventually be sold as Lotus Notes. Now: Chief Software Architect at Microsoft, after working for IBM and starting Groove Networks. Has a weblog, but hasn't posted for about a year.

Peter Roizen. Then: author of T/Maker, a spreadsheet program. Now: programmer consultant. Inventor of a Scrabble variant that uses shell glob syntax.

Bob Carr. Then: PARC Alum, Chief Scientist at Ashton-Tate, author of Framework integrated suite. Now: founder of Keep and Share. In between: co-founded Go, worked for Autodesk. Doesn't seem to have a web presence.

Jef Raskin. Then: Macintosh project creator, founder of Information Appliance. Died in 2005. His excellent web site is still up. Author of well-respected book The Humane Interface. The project he's working on in PaW, the SwyftCard, was a minor success.

Andy Hertzfeld. Then: Macintosh OS developer. Now: works at Google and hosts a bunch of websites, including folklore.org and Susan Kare's site. (Incidentally, Susan Kare now works for Chumby.) In between: worked at General Magic and Eazel, which probably only people who read this weblog remember.

Most of the people profiled in PaW provide some sample of their programming or thought process. Hertzfeld has the best one: an assembler program that makes Susan Kare's Macintosh icons bounce around a window.

Toru Iwatani. Then: designer of Pac-Man. Now: retired from Namco in 2007. Visiting professor at a Japanese university (the University of Arts in Osaka or Tokyo Polytechnic, depending on which source you believe). In PaW he is very proud of a game called Libble Rabble, which I'd never heard of. I believe the PaW interview was for a while the only English-language information available about Iwatani.

Significantly, in a recent interview Iwatani refused to comment on Ms. Pac-Man's relationship to Pac-Man. Possibly because Ms. Pac-Man is actually Pac-Man's transgendered clone, and Namco doesn't want word getting out.

Scott Kim. The only person mentioned in PaW I've met. Then: basically a puzzle designer. Now: still a puzzle designer. His website. Also has an interest in math education.

Jaron Lanier. Then: working on a visual programming/simulation language. Blows Susan Lammers's mind with a description of virtual reality (see also "Virtual World" in Future Stuff). Now: scholar in residence at Berkeley, occasional columnist for Discover. Lots of stuff on his website. Here's video of a game he wrote.

Report: boot sector viruses and rootkits poised for comeback

Security firm Panda Labs has released (PDF) its malware report for the first quarter 2008. The report covers a number of topics and makes predictions about the types of attacks we may see in the future. Forecasting these trends is always tricky—no one expected the Storm Worm to explode when it did—but Panda's prediction that we may see a rise in boot sector viruses is rather surprising. We'll touch on malware first, however, and return to this topic shortly.

Thus far, adware, trojans, and miscellaneous "other" malware including dialers, viruses, and hacking tools have captured the lion's share of the "market" as it were. These three categories account for 80.55 percent of the malware Panda Labs detected over the first quarter.

Password-stealing trojans are still a growing market, and the report cautions users, as always, to be careful of their banking records... and their World of WarCraft/Lineage II passwords. It might be interesting to take a poll of hardcore World of WarCraft players and see which of these two categories they care more about protecting, but the results would likely make a parent weep. One can always make more money, after all, but raiding Sunwell Plateau is serious business.

From here, Panda Labs trots through familiar territory. The monetization of the malware market, the prevalence of JavaScript/IFrame attack vectors, and the growing number of prepackaged virus-building kits are all issues that the report raises. We've covered all of these before, but if you've not been paying attention and want to catch up on general malware trends, the report is a good place to do it. Also, just in case you missed it, social engineering-based attacks are both dangerous and effective, and social networks, particularly those based around Web 2.0, are often tempting attack targets.

Panda's report does raise a new concern, though it comes from a surprising direction. According to the company, boot sector viruses loaded with rootkits are poised to make a comeback. This honestly sounds a bit odd, considering how long it has been since a boot virus has topped the malware charts, but it's at least theoretically possible. Such viruses have a simple method of operation. The virus copies itself into the Master Boot Record (MBR) of a hard drive, and rewrites the actual MBR data in a different section of the drive.
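The layout described above can be made concrete with a small defensive sketch. It is not taken from the Panda report; it assumes the classic 512-byte MBR layout (446 bytes of boot code, four 16-byte partition entries, and the two-byte signature 0x55 0xAA) and works on a sector already held in memory. The function names and the idea of a stored baseline fingerprint are hypothetical, and reading the first sector of a real disk needs privileged, OS-specific calls that are left out.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Classic MBR layout: 446 bytes of boot code, four 16-byte partition
// entries, then the two-byte boot signature 0x55 0xAA.
constexpr std::size_t kSectorSize = 512;
constexpr std::size_t kBootCodeSize = 446;
using Sector = std::array<std::uint8_t, kSectorSize>;

// True if the sector ends with the standard boot signature.
bool has_boot_signature(const Sector& s) {
    return s[510] == 0x55 && s[511] == 0xAA;
}

// 32-bit FNV-1a hash over the boot-code area. This is a simple change
// detector for illustration only, not a cryptographic hash.
std::uint32_t boot_code_fingerprint(const Sector& s) {
    std::uint32_t h = 2166136261u;       // FNV offset basis
    for (std::size_t i = 0; i < kBootCodeSize; ++i) {
        h ^= s[i];
        h *= 16777619u;                  // FNV prime
    }
    return h;
}

// Compare the current boot code with a fingerprint recorded earlier
// (hypothetical baseline, taken while the system was known to be clean).
bool boot_code_changed(const Sector& s, std::uint32_t baseline) {
    return boot_code_fingerprint(s) != baseline;
}

// Small self-check showing the intended use on an in-memory sector.
void self_check() {
    Sector s{};                          // all zero bytes
    s[510] = 0x55;
    s[511] = 0xAA;
    assert(has_boot_signature(s));
    const std::uint32_t baseline = boot_code_fingerprint(s);
    s[0] = 0xEB;                         // simulate overwritten boot code
    assert(boot_code_changed(s, baseline));
}
```

A check like this only notices that the boot code differs from a known state; it says nothing about whether the change is malicious, and a rootkit that intercepts disk reads could still hide the modification from it.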

Once a rootkit is loaded into the MBR, it can use its position to obfuscate its own activity. This is obviously rather handy when attempting to hide from rootkit-detection software, and could cause a new set of headaches for antivirus software if the threat actually materializes. Panda Labs' report does a good job of explaining what a boot virus is and how it can infect a system, but it says virtually nothing about why such attack vectors are a concern today.

The problem with boot viruses is that their attack vector is fairly well-guarded. Any antivirus program worth beans will detect a suspicious attempt to modify the MBR and will alert the end user accordingly. Running as a user rather than an administrator should also prevent such modification even if you don't have an antivirus scanner installed. Panda implies that this kind of exploit could be an issue in Linux, and I suppose that's theoretically possible, but Linux always creates a user account without root access by default.

Windows Vista, for its part, recommends that you run in user mode, even though the OS doesn't require it. Even in admin mode, a virus can't just get away with this type of modification, and UAC would pick up and flag any attempt to overwrite the MBR. Even if none of these barriers existed, there's still the issue of BIOS-enabled boot sector protection, which exists entirely to prevent this type of attack from occurring. If you want to catch a boot sector virus, in other words, you'll have to work at it.

Aside from the company's surprising conclusion regarding boot viruses, Panda Labs' report paints the picture of illegal businesses doing business as usual. In a way, this is actually a good thing. AV companies currently have their collective hands full dealing with the number of variants that are still spinning off the attacks and infections from last year, and the last thing the industry needs is for the Son of Storm to make an appearance.

An Interview with Bjarne Stroustrup

C++ creator Bjarne Stroustrup discusses the evolving C++0x standard, the education of programmers, and the future of programming.

JB: When did you first become interested in computing, what was your first computer and what was the first program you wrote?

BS: I learned programming in my second year of university. I was signed up to do "mathematics with computer science" from the start, but I don't really remember why. I suspect that I (erroneously) thought that computing was some sort of applied math.

My first computer was the departmental GIER computer. It was almost exclusively programmed in Algol-60. My first semi-real program plotted lines (on paper!) between points on the edge of a superellipse to create pleasant graphical designs. That was in 1970.

JB: When you created C++, was the object oriented programming (OOP) paradigm (or programming style) obviously going to gain a lot of popularity in the future, or was it a research project to find out if OOP would catch on?

BS: Neither! My firm impression (at the time) was that all sensible people "knew" that OOP didn't work in the real world: It was too slow (by more than an order of magnitude), far too difficult for programmers to use, didn't apply to real-world problems, and couldn't interact with all the rest of the code needed in a system. Actually, I'm probably being too optimistic here: "sensible people" had never heard of OOP and didn't want to hear about it.

I designed and implemented C++ because I had some problems for which it was the right solution: I needed C-style access to hardware and Simula-style program organization. It turned out that many of my colleagues had similar needs. Actually, then it was not even obvious that C would succeed. At the time, C was gaining a following, but many people still considered serious systems programming in anything but assembler adventurous and there were several languages that—like C—provided a way of writing portable systems programs. One of those others might have become dominant instead of C.

JB: Before C++, did you "just have to create C++" because of the inadequacy of other languages, for example? In essence, why did you create C++?

BS: Yes, I created C++ in response to a real need: The languages at the time didn't support abstraction for hard systems programming tasks in the way I needed it. I was trying to separate the functions of the Unix kernel so that they could run on different processors of a multi-processor or a cluster.

JB: Personally, do you think OOP is the best programming paradigm for large scale software systems, as opposed to literate programming, functional programming, procedural programming, etc.? Why?

BS: No programming paradigm is best for everything. What you have is a problem and a solution to it; then, you try to map that solution into code for execution. You do that with resource constraints and concerns for maintainability. Sometimes, that mapping is best done with OOP, sometimes with generic programming, sometimes with functional programming, etc.

OOP is appropriate where you can organize some key concepts into a hierarchy and manipulate the resulting classes through common base classes. Please note that I equate OO with the traditional use of encapsulation, inheritance, and (run time) polymorphism. You can choose alternative definitions, but this one is well-founded in history.

I don't think that literate programming is a paradigm like the others you mention. It is more of a development method like test-driven development.
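The definition of OO used in this answer (encapsulation, inheritance, and run-time polymorphism through a common base class) can be illustrated with a minimal sketch. This is an editorial example, not code from the interview; the class names Shape, Rectangle, and Triangle and the function total_area are hypothetical.

```cpp
#include <cassert>
#include <memory>
#include <vector>

// Common base class: callers only ever see this interface.
class Shape {
public:
    virtual ~Shape() = default;
    virtual double area() const = 0;   // resolved at run time
};

class Rectangle : public Shape {
public:
    Rectangle(double w, double h) : w_(w), h_(h) {}
    double area() const override { return w_ * h_; }
private:
    double w_, h_;                     // encapsulated representation
};

class Triangle : public Shape {
public:
    Triangle(double base, double height) : base_(base), height_(height) {}
    double area() const override { return 0.5 * base_ * height_; }
private:
    double base_, height_;
};

// Manipulates every concrete class through the common base class.
double total_area(const std::vector<std::unique_ptr<Shape>>& shapes) {
    double sum = 0.0;
    for (const auto& s : shapes) {
        assert(s != nullptr);          // the container must not hold empty slots
        sum += s->area();
    }
    return sum;
}
```

The function total_area never names a concrete shape, which is the point of the hierarchy: new derived classes can be added without touching it.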

C++0x

JB: In your paper, "The Design of C++0x" published in the May 2005 issue of the C/C++ Users Journal, you note that "C++'s emphasis on general features (notably classes) has been its main strength." In that paper you also mention that most of the changes and new features will be in the Standard Library. A lot of people would like to see regular expressions, threads and the like, for example. Could you give us an idea of new classes or facilities that we can expect to see in C++0x's Standard Library?

BS: The progress on standard libraries has not been what I hoped for. We will get regular expressions, hash tables, threads, many improvements to the existing containers and algorithms, and a few more minor facilities. We will not get the networking library, the date and time library, or the file system library. These will wait until a second library TR. I had hoped for much more, but the committee has so few resources and absolutely no funding for library development.

JB: Have you or others working on C++0x had a lot of genuinely good ideas for new classes or facilities? If so, will all of them be used or will some have to be left out because of time and other constraints on developing a new standard? If that is the case, what would most likely be left out?

BS: There is no shortage of good ideas in the committee or of good libraries in the wider C++ community. There are, however, severe limits to what a group of volunteers working without funding can do. What I expect to miss most will be thread pools and the file system library. However, please note that the work will proceed beyond '09 and that many libraries are already available; for example see what boost.org has to offer.

JB: When would you expect the C++0x Standard to be published?

BS: The standard will be finished in late 2008, but it takes forever to go through all the hoops of the ISO process. So, we must face the reality that "C++0x" may become C++10.

JB: Concurrent programming is obviously going to become important in the future, because of multi-core processors and kernels that get better at distributing processes among them. Do you expect C++0x will address this, and if so, how?

BS: The new memory model and a task library were voted into C++0x in Kona. That provides a firm basis for shared-memory multiprocessing, as is essential for multicores. Unfortunately, it does not address higher-level models for concurrency such as thread pools and futures, shared memory parallel programming, or distributed memory parallel processing. Thread pools and futures are scheduled for something that's likely to be C++13. Shared memory can be had using Intel's Threading Building Blocks, and distributed memory parallel processing is addressed by STAPL from Texas A&M University and other research systems. The important thing here is that given the well-defined and portable platform provided by the C++0x memory model and threads, many higher-level models can be provided.

Distributed programming isn't addressed, but there is a networking library scheduled for a technical report. That library is already in serious commercial use and its public domain implementation is available from boost.org.
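As a rough illustration of what a memory model plus threads provide, here is an editorial sketch, not code from the interview. It assumes the names std::thread and std::atomic as they appear in the standard that was eventually published as C++11; the draft facilities under discussion at the time of the interview may have differed. The function name count_in_parallel is hypothetical.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// Each worker increments a shared counter. Using std::atomic makes the
// concurrent increments well defined (no data race) under the memory model.
int count_in_parallel(int workers, int increments_per_worker) {
    assert(workers >= 0 && increments_per_worker >= 0);
    std::atomic<int> counter{0};
    std::vector<std::thread> threads;
    for (int w = 0; w < workers; ++w) {
        threads.emplace_back([&counter, increments_per_worker] {
            for (int i = 0; i < increments_per_worker; ++i)
                counter.fetch_add(1, std::memory_order_relaxed);
        });
    }
    // join() waits for each thread; everything a thread did is visible
    // to the caller once its join() has returned.
    for (auto& t : threads) t.join();
    return counter.load();
}
```

With a plain int instead of std::atomic<int> the same loop would be a data race, which is the kind of behavior a language-level memory model is meant to pin down. On some toolchains the program must be built with thread support enabled (for example a -pthread option).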

Educating the Next Generation

JB: Do you think programmers should be "armed and dangerous" with their tools like compilers, editors, debuggers, linkers, version control systems and so on very early on in their learning or careers? Do you think that universities should teach debugging and how to program in a certain environment (e.g. Unix) as well, for example?

BS: I'm not 100% sure I understand the question, but I think "yes." I don't think that it should be possible to graduate with a computer science, computer engineering, etc. degree without having used the basic tools you mention above for a couple of major projects. However, it is possible. In some famous universities, I have observed that Computer Science is the only degree you can get without writing a program.

I'm not arguing for mere training. The use of tools must not be a substitute for a good understanding of the principles of programming. Someone who knows all the power-tools well, but not the fundamental principles of software development would indeed be "armed and dangerous." Let me point to algorithms, data structures, and machine architecture as essentials. I'd also like to see a good understanding of operating systems and networking.

Some educators will point out that all of that—together with ever-popular and useful topics such as graphics and security—doesn't fit into a four-year program for most students. Something will have to give! I agree, but I think what should give is the idea that four years is enough to produce a well-rounded software developer: Let's aim to make a five-or-six-year masters the first degree considered sufficient.

JB: What should C++ programmers or any programmer do, in your view, before sitting down to write a substantial software program?

BS: Think. Discuss with colleagues and potential users. Get a good first-order understanding of the problem domain. If possible, try to be a user of an existing system in that field. Then, without too much further agonizing, try to build a simplified system to try out the fundamental ideas of a design. That "simplified system" might become a throwaway experiment or it may become the nucleus of a complete system. I'm a great fan of the idea of "growing" a system from simpler, less complete, but working and tested systems. Try out all the tool chains before making plans that are too grand.

How would the programmer, designer, team get those "fundamental ideas of a design"? Experience, knowledge of similar systems, of tools, and of libraries is a major part of the answer. The idea of a single developer carefully planning to write a system from the bare programming language has realistically been a myth for decades. David Wheeler wrote the first paper about how to design libraries in 1951—56 years ago!

JB: What type of programs do you personally enjoy writing? What programs have you written recently?

BS: These days I don't get enough time to write code, but I think writing libraries is the most fun. I wrote a small library supporting N-dimensional arrays with the usual mathematical operations. I have also been playing with regular expressions using the (draft) C++0x library (the boost.org version).

I also write a lot of little programs to test aspects of the language, but that's more work than fun, and also small programs to explore application domains that I haven't tried or haven't tried lately. It is a rare week that I don't write some code.
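For readers who have not seen the regular expression library mentioned in this answer, here is a minimal editorial sketch, not Stroustrup's code. It assumes the std::regex interface as eventually standardized in C++11 (the boost.org version referred to above lives in a different namespace). The function leading_year and its use on timeline-style headings are hypothetical.

```cpp
#include <cassert>
#include <regex>
#include <string>

// Extracts the year from a heading such as "1985 October".
// Returns -1 if the text does not start with exactly four digits
// followed by a word boundary.
int leading_year(const std::string& text) {
    static const std::regex pattern(R"(^(\d{4})\b)");
    std::smatch m;
    if (!std::regex_search(text, m, pattern)) return -1;
    assert(m.size() == 2);             // whole match plus one capture group
    return std::stoi(m[1].str());
}
```

Calling leading_year("1985 October") yields 1985, while a heading with no leading year, such as "C++ named", yields -1.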

JB: In your experience, have there been any features of C++ that newcomers to the language have had the most difficulty with? Would you have any advice for newcomers to C++ or have you found a way of teaching difficult features of C++ saving students a lot of trial and error?

BS: Some trial and error is inevitable, and may even be good for the newcomer, but yes, I have some experience introducing C++ to individuals and organizations, some of it successful. I don't think it's the features that are hard to learn; it is the understanding of the programming paradigms that causes trouble. I'm continuously amazed at how novices (of all backgrounds and experiences) come to C++ with fully formed and firm ideas of how the language should be used. For example, some come convinced that any technique not easily used in C is inherently wrongheaded, hard to use, and very inefficient. It's amazing what people are willing to firmly assert without measurements, often based on briefly looking at C++ a decade ago using a compiler that was hardly out of beta, or simply based on other people's assertions without checking if they have a basis in reality. Conversely, there is now a generation that is firmly convinced that a program is only well-designed if just about everything is part of a class hierarchy and just about every decision is delayed to run-time. Obviously, programs written by these "true OO" programmers become the best arguments for the "stick to C" programmers. Eventually, a "true OO" programmer will find that C++ has many features that don't serve their needs and that they indeed fail to gain that fabled efficiency.

To use C++ well, you have to use a mix of techniques; to learn C++ without undue pain and unnecessary effort, you must see how the language features serve the programming styles (the programming paradigms). Try to see concepts of an application as classes. It's not really hard when you don't worry too much about class hierarchies, templates, etc. until you have to.

Learn to use the language features to solve simple problems at first. That might sound trivial, but I receive many questions from people who have "studied C++" for a couple of weeks and are seriously confused by examples of multiple inheritance using names like B1, B2, D, D2, f, and mf. They are, usually without knowing it, trying to become language lawyers rather than programmers. I don't need multiple inheritance all that often, and certainly not the tricky parts. It is far more important to get a feel for writing a good constructor to establish an invariant and to understand when to define a destructor and copy operations. My point is that the latter is almost instantly useful and not difficult (within a month, I can teach it to someone who has never programmed). The finer details of inheritance and templates, on the other hand, are almost impenetrable until you have a real-world program that needs them. In The Art of Computer Programming, Don Knuth apologizes for not giving good examples of co-routines, because their advantages are not obvious in small programs. Many C++ features are like that: they don't make sense until you face a problem of the kind and scale that needs that feature.
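
To make the remark about constructors, destructors, and copy operations concrete, here is a minimal sketch. It is not from the interview: the class name `Buffer` and its members are hypothetical, and it is written against C++11 (it uses `nullptr`). The constructor either establishes the invariant or throws, the destructor releases what the constructor acquired, and the copy operations give each object its own storage.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <utility>

// Hypothetical example class: a fixed-size buffer of doubles.
// Invariant: size_ > 0 and data_ points to exactly size_ elements.
class Buffer {
public:
    // The constructor establishes the invariant or throws.
    explicit Buffer(std::size_t n) : size_(n), data_(nullptr)
    {
        if (n == 0) throw std::invalid_argument("Buffer size must be positive");
        data_ = new double[n]();   // elements value-initialized to 0.0
        assert(ok());
    }

    // The destructor releases what the constructor acquired.
    ~Buffer() { delete[] data_; }

    // Copy constructor: deep copy, so each object owns its own storage.
    Buffer(const Buffer& other)
        : size_(other.size_), data_(new double[other.size_])
    {
        std::copy(other.data_, other.data_ + size_, data_);
        assert(ok());
    }

    // Copy assignment via copy-and-swap: if the copy throws,
    // *this is left unchanged and the invariant still holds.
    Buffer& operator=(const Buffer& other)
    {
        Buffer tmp(other);
        std::swap(size_, tmp.size_);
        std::swap(data_, tmp.data_);
        return *this;
    }

    std::size_t size() const { return size_; }

    // Checked element access.
    double& at(std::size_t i)
    {
        if (i >= size_) throw std::out_of_range("Buffer index out of range");
        return data_[i];
    }

private:
    bool ok() const { return size_ > 0 && data_ != nullptr; }

    std::size_t size_;
    double* data_;
};
```

Because every constructor leaves the object in a valid state, member functions such as `at` can rely on the invariant instead of re-checking it. In everyday code `std::vector<double>` would do this job; the hand-written version only shows where the three operations fit.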

JB: Do you have any suggestions for people who are not programmers and want to learn how to program, and want to learn C++ as their first language? For instance, there's a book called Accelerated C++: Practical Programming by Example by Andrew Koenig and Barbara E. Moo. This book's approach is to teach by using the STL and advanced features at an early stage, like using strings, vectors and so on, with the aim of writing "real" programs faster. Would you agree that it's best to begin "the C++ way" if you could call it that, instead of starting off with a strictly procedural style and leaving classes, the Standard Library and other features often preceded with "an introduction to OOP" much later in a book?

BS: I have had to consider this question "for real" and have had the opportunity to observe the effects of my theories. I designed and repeatedly taught a freshman programming course at Texas A&M University. I use standard library features, such as string, vector, and sort, from the first week. I don't emphasize STL; I just use the facilities to have better types with which to introduce the usual control structures and programming techniques. I emphasize correctness and error handling from day 1. I show how to build a couple of simple types in lecture 6 (week three). I show many of the mechanisms for defining classes in lectures 8 and 9 together with the ways of using them. By lectures 10 and 11, I have the students using iostreams on files. By then, they are tired, but can read a file of structured temperature data and extract information from it. They can do it 6 weeks after seeing their first line of code. I emphasize the use of classes as a way of structuring code to make it easier to get right.

After that comes graphics, including some use of class hierarchies, and then comes the STL. Yes, you can do that with complete beginners in a semester. We have by now done that for more than 1,000 students. The reason for putting the STL after graphics is purely pedagogical: after iostreams the students are thoroughly tired of "calculations and CS stuff," but doing graphics is a treat! The fact that they need the basics of OOP to do that graphics is a minor detail. They can now graph the data read and fill class objects from a GUI.
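
The week-six exercise described above, reading structured temperature data and extracting information from it, could look roughly like the following sketch. It is not taken from the course: the record format (one `<hour> <temperature>` pair per line) and the names `Reading`, `read_readings`, and `median_temperature` are assumptions made for illustration. It uses `string`, `vector`, and `sort` with explicit error handling, and is written against C++11.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <istream>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical record format: one reading per line, "<hour> <temperature>".
struct Reading {
    int hour;            // 0..23
    double temperature;  // unit is left to the application
};

// Read all readings from a stream; throw on malformed or out-of-range data.
std::vector<Reading> read_readings(std::istream& in)
{
    std::vector<Reading> readings;
    std::string line;
    while (std::getline(in, line)) {
        if (line.empty()) continue;   // skip blank lines
        std::istringstream fields(line);
        Reading r;
        if (!(fields >> r.hour >> r.temperature))
            throw std::runtime_error("bad reading: " + line);
        if (r.hour < 0 || r.hour > 23)
            throw std::runtime_error("hour out of range: " + line);
        readings.push_back(r);
    }
    return readings;
}

// Median temperature. Precondition: at least one reading.
// Takes its argument by value so the caller's order is left untouched.
double median_temperature(std::vector<Reading> readings)
{
    assert(!readings.empty());
    std::sort(readings.begin(), readings.end(),
              [](const Reading& a, const Reading& b) {
                  return a.temperature < b.temperature;
              });
    const std::size_t n = readings.size();
    return n % 2 == 1
        ? readings[n / 2].temperature
        : (readings[n / 2 - 1].temperature + readings[n / 2].temperature) / 2.0;
}
```

`read_readings` takes a `std::istream&`, so the same function works on a `std::ifstream` opened on a data file or on a `std::istringstream` in a test.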

JB: I read an interview in Texas A&M Engineering Magazine where you said, "I decided to design a first programming course after seeing how many computer science students—including students from top schools—lacked fundamental skills needed to design and implement quality software..." What were these fundamental skills students lacked, and what did you put in your programming course to address this issue?

BS: I saw so many students who simply didn't have the notion that code itself is a topic of interest and that well-structured code is a major time saver. The notion of organizing code to be sure that it is correct and maybe even for someone else to use and modify is alien: They see code as simply something needed to hand in the answers to an exercise. I am of course not talking about all students or just students from one university or from one country.

In my course I heavily emphasize structure and correctness, and define the purpose of the course as "becoming able to produce code good enough for the use of others." I use data structures and algorithms, but the topic of the course is "programming," not fiddling with pointers to implement a doubly linked list.

And yes, I do teach pointers, arrays, and casts, but not until well after strings, vectors, and class design. You need to give the students a feel of the machine as well as the mechanisms to make the (correct) use of the machine simple. This also reflects comments I have repeatedly had from industry: that they have a shortage of developers who understand "the machine" and "systems."

JB: You have said that a programmer must be able to think clearly, understand questions and express solutions. This is in agreement with G. Polya's thesis that you must have a clear and complete understanding of a question before you can ever hope to solve it. Would you recommend supplementary general reading like G. Polya's book, How to Solve It along with reading books on programming and technique? If so, what books would you recommend?

BS: I avoid teaching "how to think." I suspect that's best taught through lots of good examples. So I give lots of good examples (to set a standard) including examples of gradual development of a program from early imperfect versions. I'm not saying anything against Polya's ideas, but I don't have the room for it in my approach. The problem with designing a course (or a curriculum) is more what to leave out than what to add.

JB: What are the most useful mathematical skills, generally, that a programmer should have an understanding of if they intend to become professional, in your view? Or would there be different mathematical skills a programmer should know for different programmers and different tasks? If this is the case could you give examples?

BS: I don't know. I think of math as a splendid way to learn to think straight. Exactly what math to learn and exactly where what kinds of math can be applied is secondary to me.

The Future of C++

JB: Your research group is looking into parallel and distributed systems. From this research, have any new ideas for the new C++0x standard come about?

BS: Not yet. The gap between a research result and a tool that can be part of an international standard is enormous. Together with Gabriel Dos Reis at TAMU, I have worked on the representation of C++ aiming at better program analysis and transformation (eventually to be applied to distributed system code). That will become important some day. A couple of my grad students analyzed the old problem of multi-methods (calling a function selected based on two or more dynamic types) and found a solution that can be integrated into C++ and performs better in time and space than any workaround. Together with Michael Gibbs from Lockheed-Martin, I developed a fast, constant-time, dynamic cast implementation. This work points to a future beyond C++0x.
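
For readers unfamiliar with the multi-method problem mentioned above, the sketch below shows the conventional double-dispatch workaround in today's C++. It is not the solution developed by the grad students; the `Shape`, `Circle`, and `Square` classes and the `intersect` operation are hypothetical, and the code is written against C++11. Selecting a function on two dynamic types takes two chained virtual calls, and every class has to know about every other class.

```cpp
#include <cassert>
#include <string>

class Circle;
class Square;

class Shape {
public:
    virtual ~Shape() = default;
    // First dispatch: on the dynamic type of *this.
    virtual std::string intersect(const Shape& other) const = 0;
    // Second dispatch: on the dynamic type of the other operand;
    // the parameter carries the already-known type of the first operand.
    virtual std::string intersect_with(const Circle& first) const = 0;
    virtual std::string intersect_with(const Square& first) const = 0;
};

class Circle : public Shape {
public:
    std::string intersect(const Shape& other) const override
    {
        return other.intersect_with(*this);   // *this is statically a Circle
    }
    std::string intersect_with(const Circle&) const override { return "circle/circle"; }
    std::string intersect_with(const Square&) const override { return "square/circle"; }
};

class Square : public Shape {
public:
    std::string intersect(const Shape& other) const override
    {
        return other.intersect_with(*this);   // *this is statically a Square
    }
    std::string intersect_with(const Circle&) const override { return "circle/square"; }
    std::string intersect_with(const Square&) const override { return "square/square"; }
};
```

Adding a third shape means touching the base class and every existing derived class, which is one reason direct language support for multi-methods is attractive.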

JB: You have some thoughts on how programming can be improved, generally. What are they?

BS: There is immense scope for improvement. A better education is a start. I think that theory and practice have become dissociated in many cases, with predictably poor results. However, we should not fool ourselves into seeing education and/or training as a panacea. There are on the order of 10 million programmers "out there" and little agreement on how to improve education. In the early days of C++, I worried a lot about "not being able to teach teachers fast enough." I had reason to worry because much of the obvious poor use of C++ can be traced to fundamental misunderstandings among educators. I obviously failed to articulate my ideals and principles sufficiently. Given that the problems are not restricted to C++, I'm not alone in that. As far as I can see, every large programming community suffers, so the problem is one of scale.

"Better programming languages" is one popular answer, but with a new language you start by spending the better part of a decade to rebuild existing infrastructure and community, and the advance comes at the cost of existing languages: At least some of the energy and resources spent for the new language would have been spent on improving old ones.

There are so many other areas where significant improvements are possible: IDEs, libraries, design methods, domain specific languages, etc. However, to succeed, we must not lose sight of programming. We must remember that the aim is to produce working, correct, and well-performing code. That may sound trite, but I am continuously shocked over how little code is discussed and presented at some conferences that claim to be concerned with software development.

JB: An area of interest for you is multi-paradigm (or multiple-style) programming. Could you explain what this is for you, what you have been doing with multi-paradigm programming lately and do you have any examples of the usefulness of multi-paradigm programming?

BS: Almost all that I do in C++ is "multi-paradigm." I really have to find a better name for that, but consider: I invariably use containers (preferably standard library (STL) containers); they are parameterized on types and supported by algorithms. That's generic programming. On the other hand, I can't remember when I last wrote a significant program without some class hierarchies. That's object-oriented programming. Put pointers to base classes in containers and you have a mixture of GP and OOP that is simpler, less error prone, more flexible, and more efficient than what could be done exclusively in GP or exclusively in OOP. I also tend to use a lot of little free-standing types, such as Color and Point. That's basic data abstraction and such types are of course used in class hierarchies and in containers.
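
The mixture described above can be sketched in a few lines. This is an illustration only, with hypothetical names (`Point`, `Shape`, `Circle`, `Rectangle`, `largest`), written against C++11 and using `std::unique_ptr` as the pointer type: a small free-standing type (data abstraction), a class hierarchy (object-oriented programming), and a standard container and algorithm applied to pointers to the base class (generic programming).

```cpp
#include <algorithm>
#include <cassert>
#include <memory>
#include <vector>

// Data abstraction: a small free-standing value type.
struct Point { double x, y; };

// Object-oriented programming: a class hierarchy with a virtual interface.
class Shape {
public:
    explicit Shape(Point position) : position_(position) {}
    virtual ~Shape() = default;
    Point position() const { return position_; }
    virtual double area() const = 0;
private:
    Point position_;
};

class Circle : public Shape {
public:
    Circle(Point center, double radius) : Shape(center), radius_(radius) {}
    double area() const override { return 3.141592653589793 * radius_ * radius_; }
private:
    double radius_;
};

class Rectangle : public Shape {
public:
    Rectangle(Point corner, double width, double height)
        : Shape(corner), width_(width), height_(height) {}
    double area() const override { return width_ * height_; }
private:
    double width_, height_;
};

// Generic programming: a standard container and a standard algorithm,
// both parameterized on the element type, here a pointer to the base class.
using Shapes = std::vector<std::unique_ptr<Shape>>;

// Returns the shape with the largest area. Precondition: shapes is not empty.
const Shape& largest(const Shapes& shapes)
{
    assert(!shapes.empty());
    auto it = std::max_element(shapes.begin(), shapes.end(),
        [](const std::unique_ptr<Shape>& a, const std::unique_ptr<Shape>& b) {
            return a->area() < b->area();
        });
    return **it;
}
```

`std::max_element` knows nothing about shapes, and `Shape` knows nothing about containers; the two styles meet only in the element type of the vector.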

JB: You are looking at making C++ better for systems programming in C++0x as I understand it, is that correct? If so, what general facilities or features are you thinking about for making C++ a great systems language?

BS: Correct. The most direct answer involves features that directly support systems programming, such as thread local storage and atomic types. However, the more significant part is improvements to the abstraction mechanism that will allow cleaner code for higher-level operations and better organization of code without time or space overheads compared to low-level code. A simple example of that is generalized constant expressions that allow compile-time evaluation of functions and guaranteed compile-time initialization of memory. An obvious use of that is to put objects in ROM. C++0x also offers a fair number of "small features" that without adding to run-time costs make the language better for writing demanding code, such as static assertions, rvalue references (for more efficient argument passing) and improved enumerations.

Finally, C++0x will provide better support for generic programming and since generic programming using templates has become key to high performance libraries, this will help systems programming also. For example, here we find concepts (a type system for types and combinations of types) to improve static checking of template code.
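
Several of the features named in this answer can be shown in a short sketch. It uses the syntax these features received when C++0x was published as C++11, so details may differ from the drafts discussed at the time of the interview; all names (`factorial`, `factorials`, `Mode`, `is_active`, `Log`) are hypothetical. It covers generalized constant expressions, static assertions, a scoped enumeration, and an rvalue-reference parameter.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Generalized constant expression: a function usable at compile time.
constexpr unsigned long long factorial(unsigned n)
{
    return n <= 1 ? 1ULL : n * factorial(n - 1);
}

// Compile-time initialized data. Because it is constant-initialized,
// a toolchain may be able to place a table like this in read-only memory.
constexpr unsigned long long factorials[] = {
    factorial(0), factorial(5), factorial(10)
};

// Static assertions: checked by the compiler, with no run-time cost.
static_assert(factorial(5) == 120, "factorial(5) must be 120");
static_assert(sizeof(factorials) / sizeof(factorials[0]) == 3,
              "table has three entries");

// Improved enumeration: scoped, strongly typed, with an explicit underlying type.
enum class Mode : unsigned char { idle, running, halted };

constexpr bool is_active(Mode m) { return m == Mode::running; }
static_assert(!is_active(Mode::idle), "idle is not an active mode");

// Rvalue reference: the argument's storage is moved into the log, not copied.
class Log {
public:
    void add(std::string&& message) { entries_.push_back(std::move(message)); }
    std::size_t size() const { return entries_.size(); }
    // Precondition: the log is not empty.
    const std::string& last() const
    {
        assert(!entries_.empty());
        return entries_.back();
    }
private:
    std::vector<std::string> entries_;
};
```

A caller hands over a string with `log.add(std::move(msg))` or passes a temporary directly; in both cases no character data needs to be copied. Concepts are left out of the sketch because they were not part of C++11.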

JB: You feel strongly about better education for software developers. Would you say, generally, that education in computer programming is appalling? Or so-so? If you were to design a course for high school students and a course (an entire degree) for university students intending to become professional, what would you include in these courses and what would you emphasize?